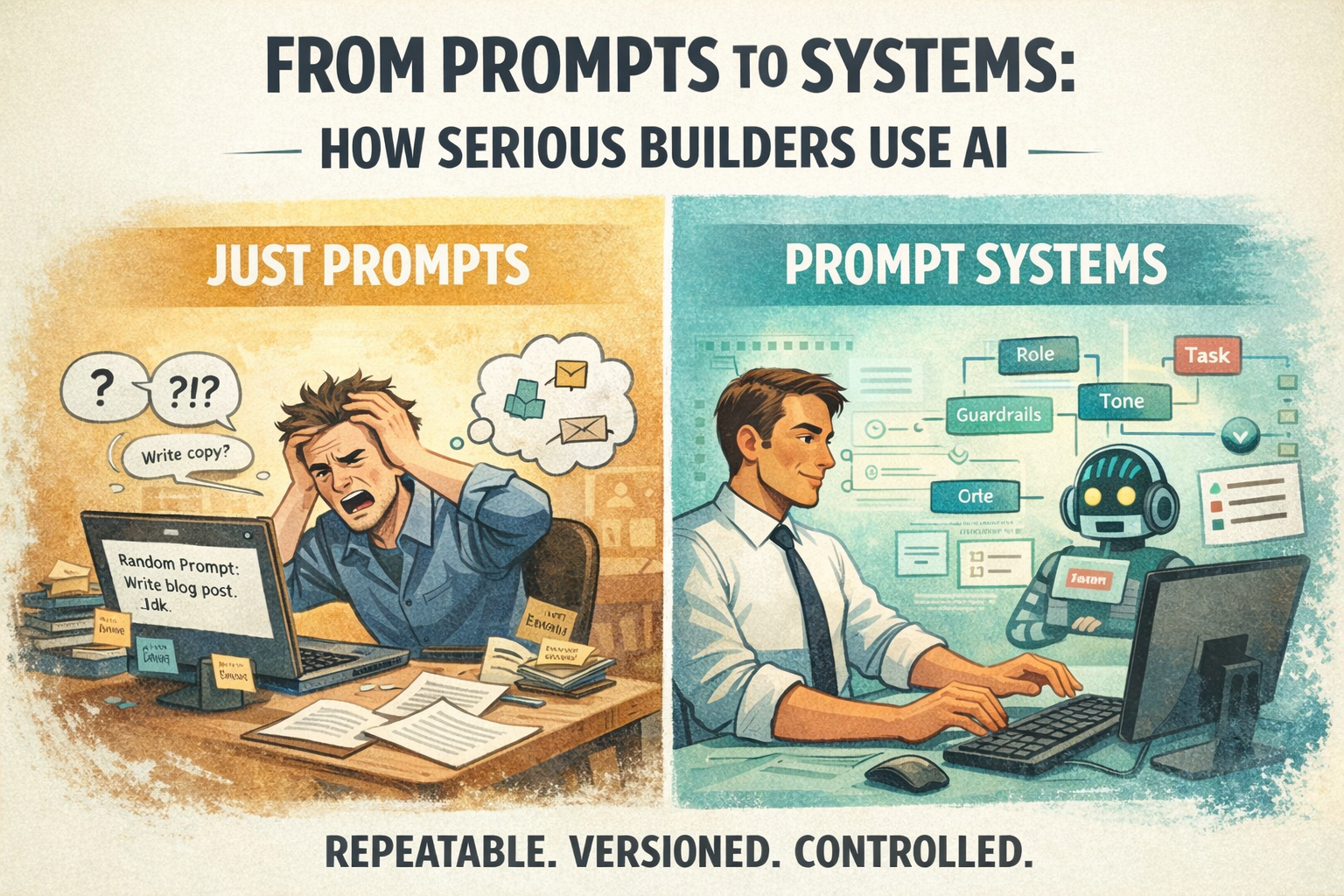

From Prompts to Systems: How Serious Builders Use AI Without Losing Their Minds

The most dangerous thing about AI is not hallucinations.

It is false confidence.

A single good answer can trick you into thinking you have built something stable. You paste the same prompt into another chat and suddenly the tone changes. The structure drifts. The logic feels softer. The model answers like it forgot who it was five minutes ago.

Nothing is broken.

You simply discovered that you were not using a system. You were relying on a lucky message.

This is the moment builders either abandon AI or become prompt engineers.

Prompts Are Not Messages Once They Are Reused

The first time you type a prompt, it is a message.

The second time, it becomes a process.

The third time, it is infrastructure.

The moment other people depend on it, your prompt becomes a contract.

It controls tone.

It controls decisions.

It controls what “correct” means inside your product.

That makes it production logic, even if it still looks like English.

And production logic needs architecture.

The Anatomy of a Real Prompt System

Serious prompt systems are not clever paragraphs. They are structured assemblies of behavior.

They usually include:

- A defined identity for the model

- Reasoning rules that govern how it thinks

- Output formats that make results usable

- Guardrails that prevent drift

- A task specification that stays fixed from run to run

- Optional clarification and retry logic

Each layer does a different job. Each one can evolve without breaking the others.

This is how prompts stop being fragile.
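As a minimal sketch of the layers listed above, each one can be held as its own field and joined only at render time. The `PromptSystem` class, its field names, and the sample wording are all hypothetical, chosen for illustration rather than taken from any particular tool.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSystem:
    """Hypothetical container: one field per layer of the prompt system."""
    identity: str
    reasoning_rules: tuple[str, ...]
    output_format: str
    guardrails: tuple[str, ...]
    task: str
    clarification: str = ""  # optional layer; an empty string means "omit"

    def render(self) -> str:
        """Join the non-empty layers into one prompt string, in a fixed order."""
        sections = [
            ("Identity", self.identity),
            ("Reasoning rules", "\n".join(f"- {r}" for r in self.reasoning_rules)),
            ("Output format", self.output_format),
            ("Guardrails", "\n".join(f"- {g}" for g in self.guardrails)),
            ("Task", self.task),
            ("Clarification", self.clarification),
        ]
        return "\n\n".join(f"## {name}\n{body}" for name, body in sections if body)


# Sample values are invented for the example.
base = PromptSystem(
    identity="You are a support assistant for an invoicing product.",
    reasoning_rules=("Quote the relevant policy before answering.",),
    output_format="Reply in JSON with keys 'answer' and 'confidence'.",
    guardrails=("Never promise refunds.",),
    task="Answer the customer's question.",
)

# Evolve one layer; every other layer is carried over untouched.
stricter = replace(base, guardrails=base.guardrails + ("Never give legal advice.",))
print(stricter.render())
```

Because the dataclass is frozen, changing a layer produces a new object instead of silently editing the old one, which is one way to keep a change to the guardrails from leaking into the task or the identity.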

The Hidden Cost of Prompt Drift

Prompt drift happens quietly.

A developer tweaks wording.

Someone adds a sentence.

Another person copy-pastes a variation.

Suddenly you have four versions doing “the same thing.”

Your AI begins to feel inconsistent.

Your team blames the model.

The real problem is that your system lost a single source of truth.

Prompt drift is not a style issue. It is operational decay.
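One way to make drift visible is to fingerprint every copy of a prompt and compare it against the canonical text. The sketch below assumes the copies can be collected into a dictionary; the function names and the sample prompts are hypothetical.

```python
import hashlib


def fingerprint(prompt: str) -> str:
    """Hash a prompt after collapsing whitespace, so reformatting alone is not flagged."""
    normalized = " ".join(prompt.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


def find_drift(canonical: str, copies: dict[str, str]) -> list[str]:
    """Return the names of copies whose wording no longer matches the canonical prompt."""
    expected = fingerprint(canonical)
    return sorted(name for name, text in copies.items() if fingerprint(text) != expected)


# Invented example: three places where "the same" prompt lives.
canonical = "Summarize the ticket in three bullet points."
copies = {
    "support_bot": "Summarize the ticket in three bullet points.",
    "slack_helper": "Summarize the ticket in   three bullet points.\n",
    "notebook": "Summarize the ticket in three bullet points. Be friendly.",
}

drifted = find_drift(canonical, copies)
print(drifted)  # ['notebook']
```

The whitespace-only variation passes, while the copy with an added sentence is flagged. A check like this does not say which version is better. It only shows that the single source of truth has split.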

Why Vaulted Prompts Exists

Vaulted Prompts treats prompt systems as living infrastructure.

It gives them version history.

It gives them lineage.

It gives them ownership.

It gives them a place to evolve safely.

When your prompt systems live inside Vaulted Prompts, you are no longer guessing which version is real. You know.
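To make version history, lineage, and ownership concrete, here is a small in-memory sketch. It is not the Vaulted Prompts API, which this article does not describe. `PromptVersion`, `PromptHistory`, and the sample owners are hypothetical names used only to show the shape of the data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    text: str
    owner: str
    parent: Optional[int]  # version this one was derived from; None for the first


class PromptHistory:
    """Append-only history for one named prompt (illustrative only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: list[PromptVersion] = []

    def commit(self, text: str, owner: str, parent: Optional[int] = None) -> PromptVersion:
        if parent is None and self._versions:
            parent = self._versions[-1].version  # default: derive from the latest
        if parent is not None and not 1 <= parent <= len(self._versions):
            raise ValueError(f"unknown parent version: {parent}")
        entry = PromptVersion(len(self._versions) + 1, text, owner, parent)
        self._versions.append(entry)
        return entry

    def current(self) -> PromptVersion:
        """The most recently committed version."""
        return self._versions[-1]

    def lineage(self, version: int) -> list[int]:
        """Walk parent links from the given version back to the first one."""
        chain: list[int] = []
        cursor: Optional[int] = version
        while cursor is not None:
            chain.append(cursor)
            cursor = self._versions[cursor - 1].parent
        return chain


history = PromptHistory("ticket_summary")
history.commit("Summarize the ticket.", owner="dana")
history.commit("Summarize the ticket in three bullets.", owner="dana")
history.commit("Summarize the ticket in one sentence.", owner="lee", parent=1)

print(history.current().version, history.current().owner)  # 3 lee
print(history.lineage(3))  # [3, 1]
```

Old versions are never overwritten, so the question of which version is real comes down to a lookup: `current()` for what is live, `lineage()` for where it came from, and `owner` for who to ask.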

That is the difference between experimenting and operating.

The Hard Truth

If your prompts are scattered across chats, documents, and memory, you do not have AI systems.

You have accidents that happened to work.

Accidents do not scale.