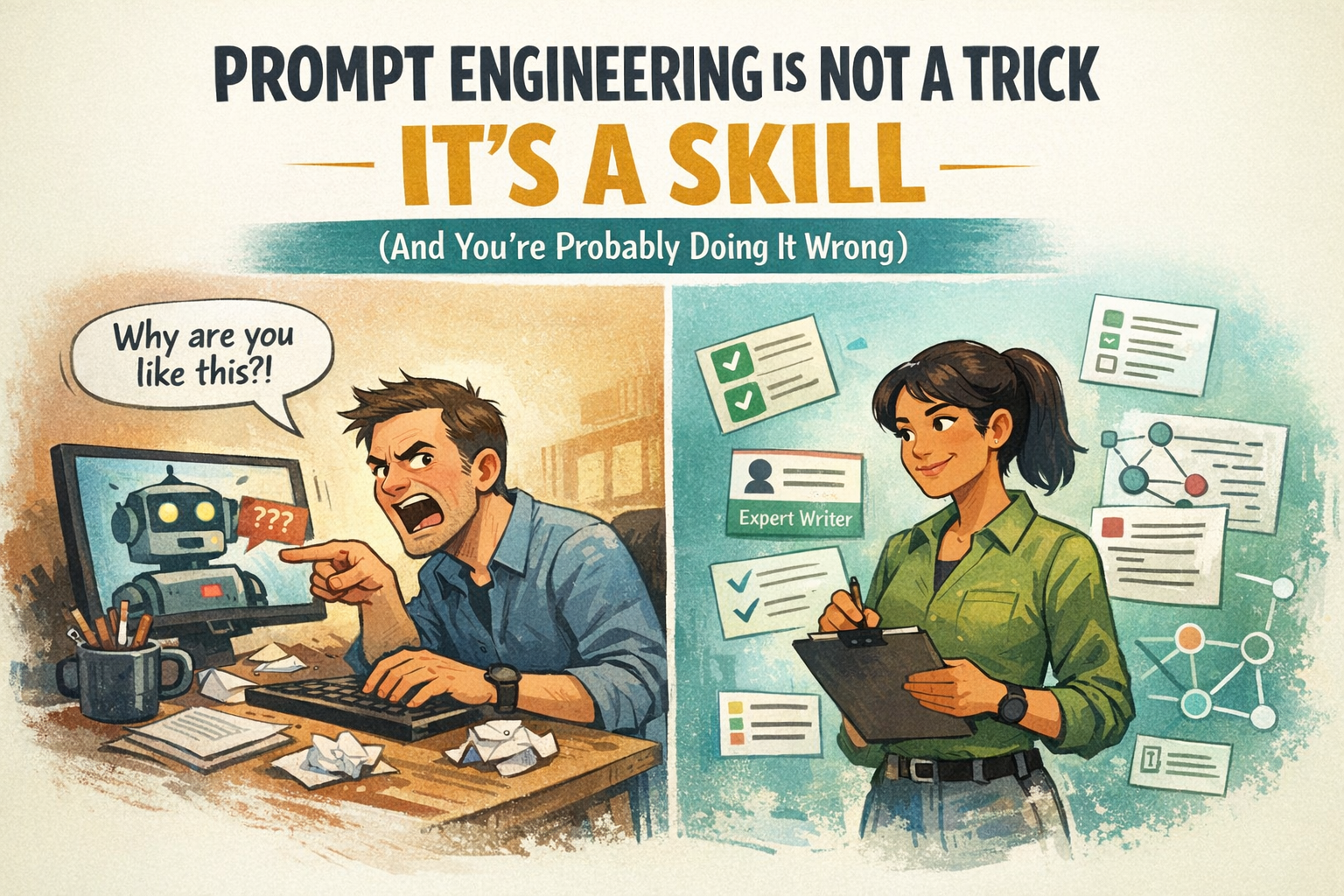

Prompt Engineering Is Not a Trick, It Is a Skill (And You Are Probably Doing It Wrong)

Most people meet AI the same way.

You type a sentence.

It gives you something impressive.

You stare at the screen for a second longer than normal.

Then you immediately start asking it to do your job.

For a brief and dangerous moment, everything feels easy.

The machine sounds confident.

The answers feel helpful.

You start trusting it.

Then the cracks show up.

One day the tone feels wrong. Another day the format is off. A task that worked perfectly last week suddenly produces something strange. You find yourself rewriting the same request over and over, wondering if the model is tired, distracted, or quietly annoyed with you.

Nothing is broken. You simply reached the point where luck stops working.

Prompt Engineering Is Not About Asking Better Questions

Prompt engineering is not the art of being polite to robots.

It is not clever wording.

It is not a collection of magic phrases from Twitter threads.

Prompt engineering is the practice of designing structured language systems that control how a model behaves.

It includes:

• Defining identities

• Controlling reasoning style

• Enforcing output formats

• Adding behavioral constraints

• Designing repeatable workflows

You are not chatting with the model. You are programming a probabilistic system using natural language. That is why vague prompts produce vague answers and chaotic prompts produce chaotic behavior.

This is also why improvisation stops working the moment you try to rely on AI for anything real.

The Wall Everyone Hits

Almost every serious user runs into the same problem.

A prompt works once.

It works twice.

Then it stops working the way you remember.

You know you wrote something better before. You just cannot find it.

Your best prompts are scattered across:

• Chat histories

• Notes apps

• Old documents

• Random text files

• Conversations you will never scroll back to

Your prompt library is not a system. It is digital archaeology.

And archaeology does not scale.

Prompts Become Infrastructure Faster Than You Think

The moment a prompt is reused, it stops being a message and becomes infrastructure.

It defines how your company writes.

It defines how your product reasons.

It defines what correct output looks like.

It quietly becomes part of your operational logic.

Your prompts start to encode standards, tone, and judgment. They become institutional knowledge.

Which means losing them is not just an inconvenience. It is operational damage.

What Real Prompt Engineering Looks Like

A serious prompt does more than ask for an answer.

It defines who the model is supposed to be.

It defines how it should reason.

It defines what rules it must follow.

It defines what format it must return.

It defines what task it is responsible for.

There is a massive difference between:

“Write a product description.”

and

“You are a senior SaaS copywriter. Write three benefit-focused product descriptions targeted at solo founders. Keep each under 120 words. Avoid buzzwords. Use clear headings. Output in markdown.”

One is a hope.

The other is a system.
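One way to make that difference concrete is to stop writing the prompt as a single string and assemble it from named parts. The sketch below is only an illustration: `PromptSpec`, its fields, and `render` are hypothetical names invented for this example, not part of any library or product.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a structured prompt."""
    role: str                                        # who the model should be
    task: str                                        # what it is responsible for
    reasoning: str = ""                              # how it should reason (optional)
    rules: list[str] = field(default_factory=list)   # constraints it must follow
    output_format: str = ""                          # format it must return

    def render(self) -> str:
        """Join the non-empty parts into one prompt string."""
        parts = [f"You are {self.role}.", self.task]
        if self.reasoning:
            parts.append(self.reasoning)
        parts.extend(self.rules)
        if self.output_format:
            parts.append(f"Output in {self.output_format}.")
        return " ".join(parts)


spec = PromptSpec(
    role="a senior SaaS copywriter",
    task="Write three benefit-focused product descriptions targeted at solo founders.",
    rules=["Keep each under 120 words.", "Avoid buzzwords.", "Use clear headings."],
    output_format="markdown",
)
print(spec.render())
```

Once the parts have names, changing the audience or the word limit is an edit to one field instead of a rewrite from memory.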

Why Vaulted Prompts Exists

Once you realize that prompts are operational assets, the problem becomes obvious.

Your most valuable workflows are undocumented, unversioned, and unprotected.

Vaulted Prompts exists to treat prompt systems like the infrastructure they already are.

It gives you centralized storage, version history, structure, reuse, and team ownership. It gives your best thinking a place to live where it can evolve without disappearing into scrollback.
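To show what version history means for a prompt, here is a minimal in-memory sketch. It is not the Vaulted Prompts API; `PromptLibrary`, `save`, and `get` are hypothetical names, and a real system would also need persistence, ownership, and access control.

```python
from typing import Optional


class PromptLibrary:
    """Hypothetical in-memory store where every save adds a new version."""

    def __init__(self) -> None:
        # Maps a prompt name to its versions, oldest first.
        self._versions: dict[str, list[str]] = {}

    def save(self, name: str, text: str) -> int:
        """Store a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(text)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the requested version, or the latest when none is given."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise ValueError(f"{name!r} has no version {version}")
        return history[version - 1]


library = PromptLibrary()
library.save("product-description", "Write a product description.")
library.save("product-description", "You are a senior SaaS copywriter. Write a product description.")

print(library.get("product-description"))             # latest version
print(library.get("product-description", version=1))  # the original wording
```

The point of the sketch is that an earlier wording stays retrievable by name and number instead of living somewhere in scrollback.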

Your intelligence layer deserves better than a chat window.

The Rule That Saves You Years

If your prompt is longer than three lines, used more than once, or shared with someone else, it deserves a real home.

Because intelligence that cannot be reused is just a clever accident.

And accidents do not scale.
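The rule above is simple enough to write down as a check. This is a sketch only: the function name and parameters are hypothetical, and "three lines" is counted here as newline-separated lines.

```python
def deserves_real_home(prompt: str, times_used: int, shared: bool) -> bool:
    """Apply the rule: over three lines, used more than once, or shared."""
    line_count = len(prompt.splitlines())
    return line_count > 3 or times_used > 1 or shared


one_off = "Summarize this email."
reused = "You are a support agent.\nAnswer politely.\nCite the help center."

print(deserves_real_home(one_off, times_used=1, shared=False))
print(deserves_real_home(reused, times_used=5, shared=False))
```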