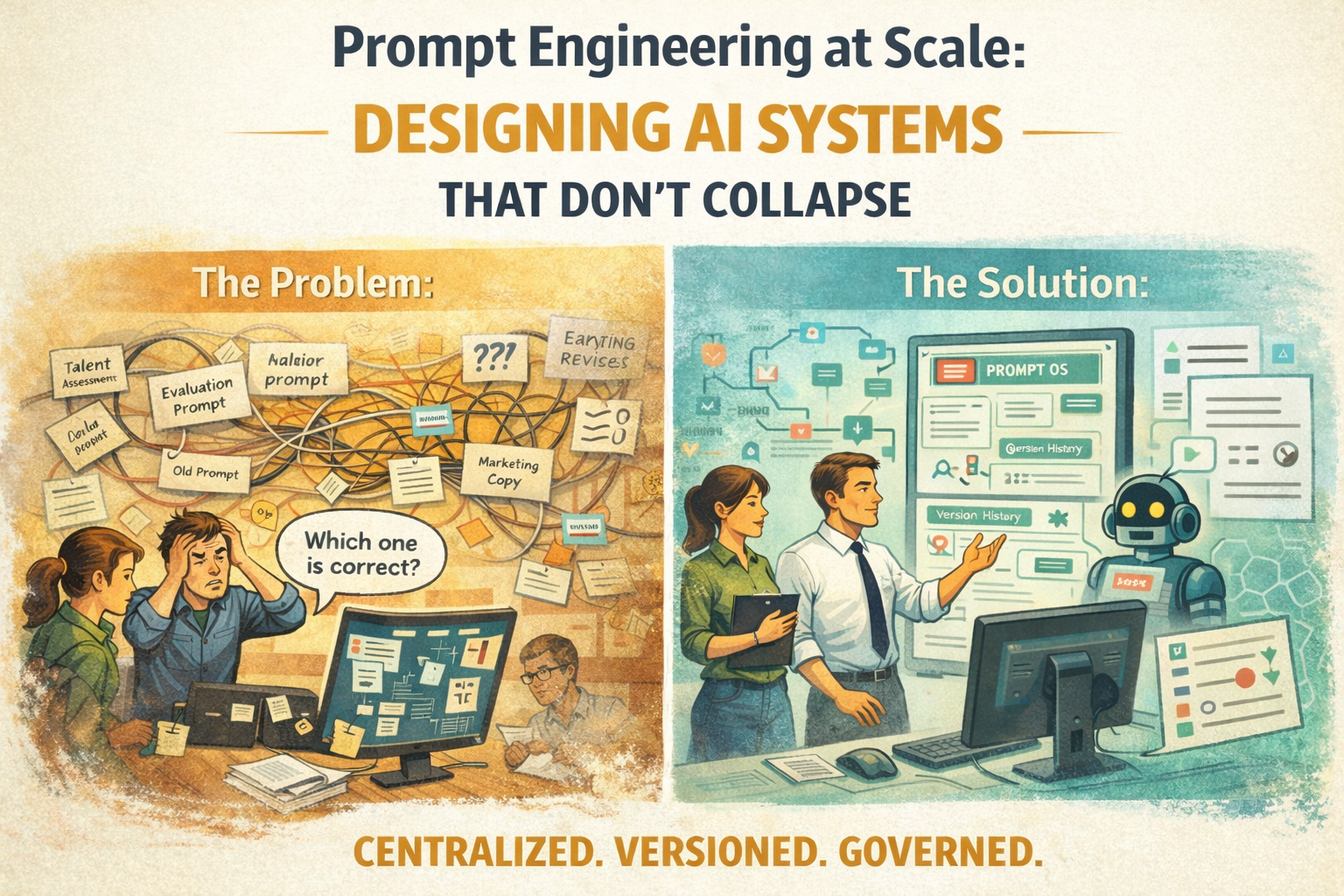

Prompt Engineering at Scale: Designing AI Systems That Do Not Collapse

The first few prompts feel clean.

The tenth feels messy.

By the twentieth, nobody is sure which one is correct.

This is how every ungoverned AI system starts.

Prompt chaos is not loud. It is quiet. It grows in the background until your AI begins making inconsistent decisions and your team quietly loses trust in it.

At that point, people start blaming the model.

They are blaming the wrong thing.

Prompt Debt Is Real

Prompt debt looks like:

- Multiple prompts that do the same task slightly differently

- Copy-paste variants with no owner

- Temporary hacks that become permanent

- Nobody remembering why a rule exists

- Outputs slowly drifting in tone and quality

This debt compounds quietly. It is far harder to clean up than to prevent.
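One way to make the first symptom visible is to scan your prompts for near-duplicates. The sketch below is a minimal illustration, not a prescribed method: it assumes prompts are available as a plain name-to-text mapping, and the function name `find_near_duplicates` and the 0.85 similarity threshold are hypothetical choices you would tune for your own library.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is ignored."""
    return " ".join(text.lower().split())


def find_near_duplicates(prompts: dict[str, str], threshold: float = 0.85):
    """Return (name_a, name_b, similarity) for prompt pairs at or above threshold.

    The threshold is an assumed starting point, not a recommended value.
    """
    hits = []
    # Compare every pair once; sorting makes the pair order deterministic.
    for (name_a, text_a), (name_b, text_b) in combinations(sorted(prompts.items()), 2):
        ratio = SequenceMatcher(None, normalize(text_a), normalize(text_b)).ratio()
        if ratio >= threshold:
            hits.append((name_a, name_b, round(ratio, 2)))
    # Most similar pairs first, since those are the likeliest duplicates.
    return sorted(hits, key=lambda hit: hit[2], reverse=True)


if __name__ == "__main__":
    library = {
        "summary_v1": "Summarize the ticket in three bullet points.",
        "summary_v2": "Summarize the ticket in 3 bullet points.",
        "translate": "Translate the following text into French.",
    }
    for name_a, name_b, score in find_near_duplicates(library):
        print(f"{name_a} and {name_b} look like variants (similarity {score})")
```

Character-level similarity only catches copy-paste variants. Two prompts that do the same job in different words would need a human review or a semantic comparison.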

Prompt as Infrastructure

At scale, prompts must be treated like software systems.

They need:

- Central ownership

- Version history

- Rollback paths

- Access control

- Searchability

- Documentation

- Structured organization

Once your prompts need this kind of governance, you are no longer “using AI.”

You are running AI operations.
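To make the requirements above concrete, here is a minimal in-memory sketch of a prompt registry with ownership, version history, rollback, and search. Everything in it is an assumption for illustration: the class and method names (`PromptRegistry`, `publish`, `rollback`) are hypothetical, access control is reduced to "only the owner may publish," and a real system would persist its data and log who changed what. It does not represent how Vaulted Prompts or any other product is implemented.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    author: str
    note: str  # documentation: why this change was made
    created_at: datetime


@dataclass
class PromptRecord:
    name: str
    owner: str
    versions: list[PromptVersion] = field(default_factory=list)
    active: int = 0  # version number currently served; 0 means none yet


class PromptRegistry:
    """Hypothetical registry; in-memory only, for illustration."""

    def __init__(self) -> None:
        self._records: dict[str, PromptRecord] = {}

    def register(self, name: str, owner: str) -> None:
        if name in self._records:
            raise ValueError(f"prompt {name!r} already exists")
        self._records[name] = PromptRecord(name=name, owner=owner)

    def publish(self, name: str, text: str, author: str, note: str) -> PromptVersion:
        record = self._records[name]
        # Simplified access control: only the owner may publish.
        if author != record.owner:
            raise PermissionError(f"{author!r} does not own {name!r}")
        version = PromptVersion(
            number=len(record.versions) + 1,
            text=text,
            author=author,
            note=note,
            created_at=datetime.now(timezone.utc),
        )
        record.versions.append(version)  # history is append-only
        record.active = version.number
        return version

    def get(self, name: str) -> str:
        record = self._records[name]
        if record.active == 0:
            raise LookupError(f"prompt {name!r} has no published version")
        return record.versions[record.active - 1].text

    def rollback(self, name: str, to_version: int) -> None:
        record = self._records[name]
        if not 1 <= to_version <= len(record.versions):
            raise ValueError(f"no version {to_version} for {name!r}")
        record.active = to_version  # old versions are kept, never deleted

    def search(self, term: str) -> list[str]:
        """Names of prompts whose name or active text contains the term."""
        term = term.lower()
        matches = []
        for name, record in self._records.items():
            active_text = record.versions[record.active - 1].text if record.active else ""
            if term in name.lower() or term in active_text.lower():
                matches.append(name)
        return sorted(matches)


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("support-reply", owner="alice")
    registry.publish("support-reply", "Be concise.", author="alice", note="initial")
    registry.publish("support-reply", "Be concise and warm.", author="alice", note="tone fix")
    registry.rollback("support-reply", 1)
    print(registry.get("support-reply"))
```

The design choice worth noticing is that rollback only moves a pointer. Nothing is overwritten, so the history that explains why a rule exists survives every change.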

Vaulted Prompts as Your Prompt OS

Vaulted Prompts becomes the operating system for your intelligence layer.

It becomes the place where:

- Prompt logic lives

- Team standards are enforced

- AI behavior is governed

- Institutional knowledge is preserved

- New hires learn how your company thinks

It is not storage.

It is control.

The Warning

The biggest failure mode of AI teams is not hallucination.

It is entropy.

Without infrastructure, your prompts decay, fragment, and lose coherence.

And once trust is lost, adoption goes with it.

That collapse is avoidable.

But only if you build your intelligence systems like the real production infrastructure they already are.